3 Wilcoxon rank sum

Traditional parametric testing methods are based on the assumption that data are generated by well-known distributions characterized by one or more unknown parameters. Critical values and p-values are computed from the distribution of the test statistic under the null hypothesis, which is derived from assumptions about the underlying distribution of the data.

Nonparametric methods require only mild assumptions about the underlying populations from which the data are obtained. They remain valid when parametric assumptions do not hold, and when those assumptions do hold they are typically only slightly less powerful than their parametric counterparts.

3.0.1 Two sample T test

Suppose

\[X_i \overset{\text{iid}}{\sim} N(\mu_x, \sigma^2),\ i = 1,\dots,m, \qquad Y_j \overset{\text{iid}}{\sim} N(\mu_y, \sigma^2),\ j = 1,\dots,n,\]

with the \(X_i\) independent of the \(Y_j\). A natural estimate of \(\mu_x - \mu_y\) is \(\bar{X} - \bar{Y}\) (the MLE), and

\[\bar{X} - \bar{Y} \sim N\left(\mu_x - \mu_y,\ \sigma^2\left(\frac{1}{m} + \frac{1}{n}\right)\right).\]

The sample variances and the pooled variance are

\[\begin{aligned} S_x^2 &= \frac{1}{m-1}\sum_{i=1}^{m}(X_i - \bar{X})^2 \\ S_y^2 &= \frac{1}{n-1}\sum_{j=1}^{n}(Y_j - \bar{Y})^2 \\ S_p^2 &= \frac{\sum_{i=1}^{m}(X_i-\bar{X})^2+\sum_{j=1}^{n}(Y_j-\bar{Y})^2}{m+n-2} \end{aligned}\]

The test statistic is

\[T = \frac{(\bar{X} - \bar{Y})- (\mu_x-\mu_y)}{S_p\sqrt{\frac{1}{m}+\frac{1}{n}}} \sim t_{m+n-2},\]

and the hypotheses are \(H_0: \mu_x = \mu_y\) vs. \(H_1: \mu_x \ne \mu_y\) (or the one-sided alternatives \(\mu_x > \mu_y\), \(\mu_x < \mu_y\)).
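As a rough check of the formulas above, the pooled statistic can be computed by hand and compared with R's `t.test`. This is only a minimal sketch: the seed and the simulated samples are assumptions chosen for illustration, and `var.equal = TRUE` is needed because `t.test` otherwise runs Welch's unequal-variance test.

```r
# Illustrative data only: the seed and sample sizes are arbitrary assumptions
set.seed(1)
m <- 28; n <- 30
x <- rnorm(m, mean = 1, sd = 1)
y <- rnorm(n, mean = 1.1, sd = 1)

# Pooled variance S_p^2 and the T statistic under H0: mu_x = mu_y
sp2 <- (sum((x - mean(x))^2) + sum((y - mean(y))^2)) / (m + n - 2)
t_manual <- (mean(x) - mean(y)) / sqrt(sp2 * (1 / m + 1 / n))

# Two-sided p-value from the t distribution with m + n - 2 degrees of freedom
p_manual <- 2 * pt(-abs(t_manual), df = m + n - 2)

# Built-in pooled-variance test for comparison
fit <- t.test(x, y, var.equal = TRUE)
c(manual = t_manual, builtin = unname(fit$statistic))
c(manual = p_manual, builtin = fit$p.value)
```

The two rows should agree up to floating-point error.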

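# t.test() defaults to Welch's unequal-variance test; use var.equal=TRUE for the pooled-variance test

m=28; n=30; x=rnorm(m,mean=1,sd=1); y=rnorm(n,mean=1.1,sd=1);

t.test(x,y)

##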

## Welch Two Sample t-test

##

## data: x and y

## t = -0.32493, df = 54.432, p-value = 0.7465

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## -0.6230575 0.4492379

## sample estimates:

## mean of x mean of y

## 0.9324144 1.0193242

3.0.2 Wilcoxon Rank Sum Test

We have two independent samples from two populations, X and Y, and interest is in the difference in location between the two populations. While `t.test` handles both one-sample and two-sample problems under normality, the Wilcoxon rank sum test is a nonparametric alternative to the two-sample t test. X and Y must be independent. Under the one-sided alternative considered here, the distribution function of X lies at or below that of Y at every point t; in other words, X tends to be larger than Y.

3.0.3 Hypothesis

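- \(H_0: G(t) = F(t)\) for all \(t\), where \(F\) and \(G\) denote the distribution functions of X and Y

- \(H_1: G(t) \ge F(t)\) for all \(t\), with strict inequality for some \(t\); that is, X is stochastically larger than Y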

3.0.4 Location Shift Model

- \(X \overset{d}{=} Y + \Delta\), that is, X has the same distribution as Y shifted by \(\Delta\)

- \(\Delta\): location shift, or treatment effect

- if \(\Delta > 0 (<0)\), it is the expected increase (decrease) due to the treatment

- if EX and EY exist, \(\Delta = EX - EY\)

- in terms of the location-shift model, \(H_0\) becomes \(H_0: \Delta = 0\)

3.0.5 Rank

u=c(-1,0,2); c( median(u), mean(u), rank(u) );

## [1] 0.0000000 0.3333333 1.0000000 2.0000000 3.0000000

u[3]=100; u

## [1] -1 0 100

c( median(u), mean(u), rank(u));

## [1] 0 33 1 2 3

u[1]=-200; u

## [1] -200 0 100

c( median(u), mean(u), rank(u));

## [1] 0.00000 -33.33333 1.00000 2.00000 3.00000

u=c(-1,-1,0,2); rank(u); #?rank

## [1] 1.5 1.5 3.0 4.0

3.0.6 Test Statistic

- Combine the two samples \(\{X_1,\dots,X_m\}\) and \(\{Y_1,\dots,Y_n\}\) into one, and rank them from 1 to \(m+n\), low to high

- Let \(R_i\) denote the rank of \(X_i\) in this combined ranking

- The Wilcoxon test statistic is \(W = \sum_{i=1}^{m}R_i\)

- \(\min W = 1 + \dots + m = m(m+1)/2\)

- \(\max W = (n+1) + \dots + (n+m) = m(2n+m+1)/2\)
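The ranking steps above can be sketched in a few lines of R. The two small samples here are made-up values for illustration (chosen to have no ties), not data from the text.

```r
# Hypothetical toy samples (assumption: no ties)
x <- c(2.1, 3.4, 5.0)         # m = 3
y <- c(1.0, 2.8, 4.2, 6.3)    # n = 4
m <- length(x); n <- length(y)

# Rank the combined sample from low to high; the first m ranks belong to x
r <- rank(c(x, y))
R <- r[1:m]
W <- sum(R)

# Smallest and largest possible values of W
W_min <- m * (m + 1) / 2
W_max <- m * (2 * n + m + 1) / 2
c(W = W, min = W_min, max = W_max)
```

Here the x values occupy ranks 2, 4 and 6 in the combined sample, so W = 12, between the bounds 6 and 18.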

3.0.7 Distribution of W

- \(P(W=k) = \ ?\) for \(\min W \le k \le \max W\)

- Under \(H_0\), \(P(W = \min W) = P(W = \max W) = \frac{m!\,n!}{(m+n)!}\)

- The Mann-Whitney Statistic

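- \(U = \sum_{i=1}^{m}\sum_{j=1}^{n} I(X_i > Y_j)\), where \(I(\cdot)\) is the indicator function

- The statistic U counts the number of pairs \((X_i, Y_j)\) in which the y value comes before the x value in the combined ordering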

- \(W = U + \frac{m(m+1)}{2}\)

- min U = 0, max U = mn
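One way to see where the distribution of W comes from: under \(H_0\) (and with no ties), every choice of m ranks out of m+n is equally likely for the x sample, so the null distribution can be enumerated directly when the samples are small. The sketch below does this with `combn` and compares the result with `dwilcox`, which in R is parameterized in terms of U rather than W. The sizes m = 4 and n = 5 are just an example.

```r
m <- 4; n <- 5

# All choose(m+n, m) possible rank sets for the x sample, equally likely under H0
rank_sets <- combn(m + n, m)
W <- colSums(rank_sets)

# Shift to the Mann-Whitney scale: U = W - m(m+1)/2
U <- W - m * (m + 1) / 2

# Exact null probabilities P(U = k) for k = 0, ..., mn
p_enum <- as.vector(table(factor(U, levels = 0:(m * n)))) / choose(m + n, m)
p_r    <- dwilcox(0:(m * n), m, n)

max(abs(p_enum - p_r))   # should be numerically zero
```

Full enumeration grows quickly with m and n, so this is only practical as a check on small cases.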

3.0.8 Computation

m=4; n=5; mn=m*n

c( dwilcox(0,m,n), dwilcox(mn,m,n), 1/choose(m+n,m))

## [1] 0.007936508 0.007936508 0.007936508

u=0:mn; plot(u, dwilcox(u,m,n),type="h",las=1)

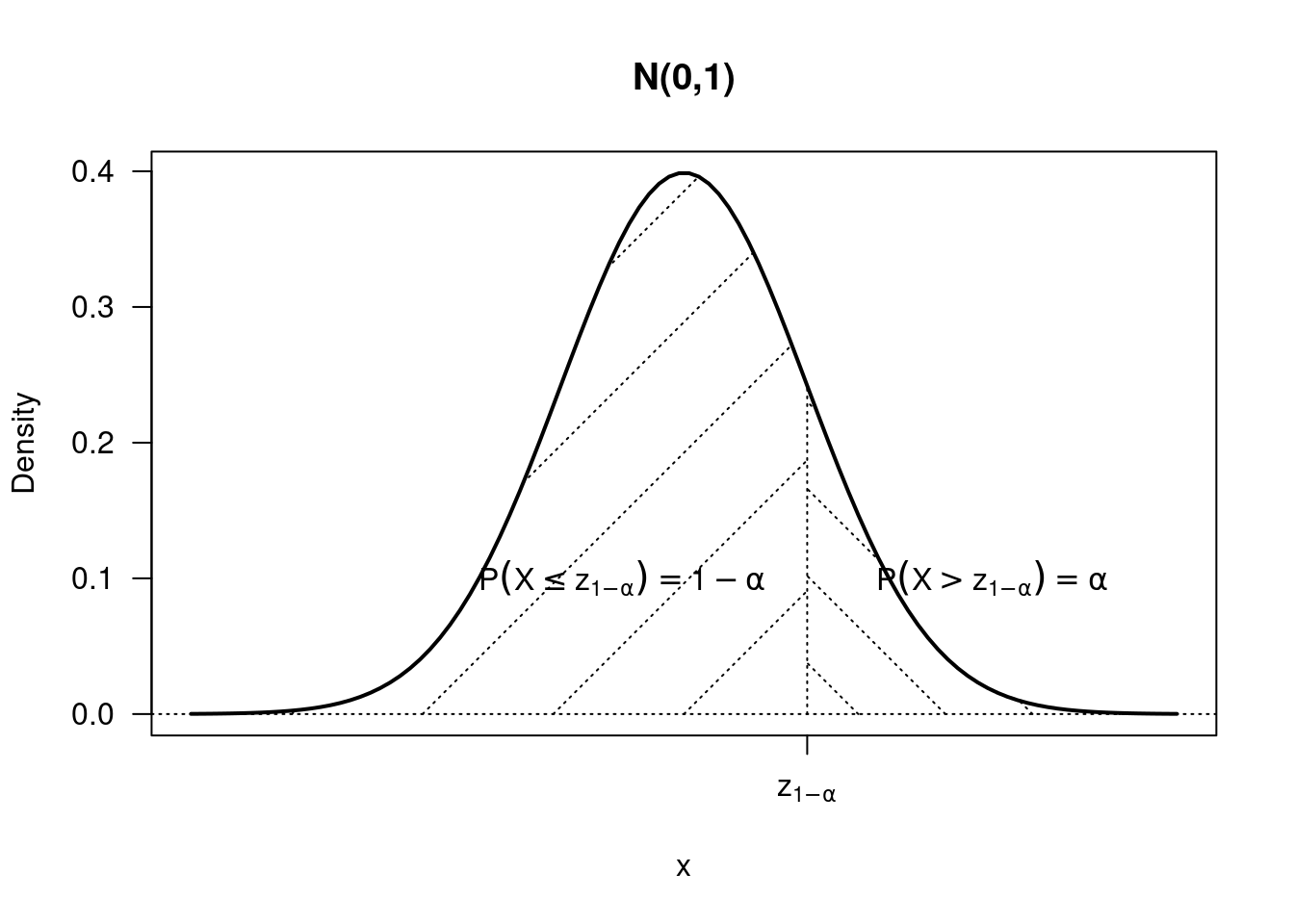

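- \(H_0: \Delta = 0\) vs. \(H_1: \Delta > 0\)

- Reject \(H_0\) if \(U \ge u_{\alpha}\), where \(u_{\alpha}\) satisfies \(P(U \ge u_{\alpha}) \le \alpha\) under \(H_0\)

- \(H_0: \Delta = 0\) vs. \(H_1: \Delta <0\)

- Reject \(H_0\) if \(U \le mn - u_{\alpha}\)

- \(H_0: \Delta = 0\) vs. \(H_1: \Delta \ne 0\)

- Reject \(H_0\) if \(U \ge u_{\alpha/2}\) or \(U \le mn - u_{\alpha/2}\)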
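Because U is discrete, the critical value has to be chosen so that the tail probability does not exceed \(\alpha\). The following is a minimal sketch using `qwilcox` and `pwilcox`, with m = 4, n = 5 and \(\alpha = 0.05\) as illustrative assumptions, and taking \(u_\alpha\) to be the smallest integer with \(P(U \ge u_\alpha) \le \alpha\) under \(H_0\).

```r
m <- 4; n <- 5; alpha <- 0.05

# qwilcox(1 - alpha, m, n) is the smallest q with P(U <= q) >= 1 - alpha,
# so u_alpha = q + 1 is the smallest value with P(U >= u_alpha) <= alpha
u_alpha <- qwilcox(1 - alpha, m, n) + 1

# Attained size of the one-sided test that rejects when U >= u_alpha
size_upper <- pwilcox(u_alpha - 1, m, n, lower.tail = FALSE)

# The null distribution of U is symmetric about mn/2, so the lower-tail test
# that rejects when U <= mn - u_alpha has the same size
size_lower <- pwilcox(m * n - u_alpha, m, n)

c(u_alpha = u_alpha, size_upper = size_upper, size_lower = size_lower)
```

The attained size is generally below the nominal \(\alpha\), which is why exact rank tests tend to be slightly conservative in small samples.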

3.0.9 Example

x=c(0.80, 0.83, 1.89, 1.04, 1.45, 1.38, 1.91, 1.64, 0.73, 1.46)

y=c(1.15, 0.88, 0.90, 0.74, 1.21)

# oxy[i,j] is 1 when x[i] > y[j], so its sum is the Mann-Whitney U

oxy=outer(x,y,function(u,v){(u>v)*1})

u=sum(oxy); u

## [1] 35

# exact upper-tail p-value P(U >= u) under H0

pwilcox(u-1,length(x),length(y),lower.tail=F)

## [1] 0.1272061

wilcox.test(x, y, alternative = "g",conf.int=T);

##

## Wilcoxon rank sum exact test

##

## data: x and y

## W = 35, p-value = 0.1272

## alternative hypothesis: true location shift is greater than 0

## 95 percent confidence interval:

## -0.08 Inf

## sample estimates:

## difference in location

## 0.305

3.0.10 Hodges-Lehmann Estimate

# Hodges-Lehmann estimate of the shift: the median of all pairwise differences x_i - y_j

xy=outer(x,y,"-"); median(xy)

## [1] 0.305

3.0.11 Example

x=c(18.85,16.93,19.29,18.31,17.27,18.64,17.82,19.00,19.58,18.04,17.27,19.19)

y=c(19.23,19.57,19.50,18.64,18.70,19.54,19.04,20.67,20.71,18.99,19.37,19.06)

par(mfrow=c(2,1));

hist(x,xlim=range(x,y)); hist(y,xlim=range(x,y));

wilcox.test(x,y,alternative="less",conf.int=T)

## Warning in wilcox.test.default(x, y, alternative = "less", conf.int = T): cannot

## compute exact p-value with ties

## Warning in wilcox.test.default(x, y, alternative = "less", conf.int = T): cannot

## compute exact confidence intervals with ties

##

## Wilcoxon rank sum test with continuity correction

##

## data: x and y

## W = 26.5, p-value = 0.004672

## alternative hypothesis: true location shift is less than 0

## 95 percent confidence interval:

## -Inf -0.350075

## sample estimates:

## difference in location

## -1.002781

wilcox.test(x,y,alternative="less",conf.int=T,exact=F,correct=T)

##

## Wilcoxon rank sum test with continuity correction

##

## data: x and y

## W = 26.5, p-value = 0.004672

## alternative hypothesis: true location shift is less than 0

## 95 percent confidence interval:

## -Inf -0.350075

## sample estimates:

## difference in location

## -1.002781

wilcox.test(x,y,alternative="less",conf.int=T,exact=F,correct=F)

##

## Wilcoxon rank sum test

##

## data: x and y

## W = 26.5, p-value = 0.004293

## alternative hypothesis: true location shift is less than 0

## 95 percent confidence interval:

## -Inf -0.3500476

## sample estimates:

## difference in location

## -1.002781

3.0.12 Kolmogorov-Smirnov

x=rnorm(50)

y=runif(30)

# Do x and y come from the same distribution?

ks.test(x, y)

##

## Two-sample Kolmogorov-Smirnov test

##

## data: x and y

## D = 0.58, p-value = 2.381e-06

## alternative hypothesis: two-sided
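The statistic D reported by `ks.test` is the largest vertical distance between the two empirical distribution functions. As a hedged sketch, it can be recomputed with `ecdf`. The seed is an assumption added for reproducibility, so the numbers will not match the output above.

```r
# Seed is an assumption added for reproducibility
set.seed(1)
x <- rnorm(50)
y <- runif(30)

# Empirical CDFs of the two samples
Fx <- ecdf(x)
Fy <- ecdf(y)

# Both ECDFs are step functions, so the largest gap occurs at a pooled data point
pooled <- sort(c(x, y))
D_manual <- max(abs(Fx(pooled) - Fy(pooled)))

fit <- ks.test(x, y)
c(manual = D_manual, builtin = unname(fit$statistic))
```

Unlike the rank sum test, which targets a shift in location, this comparison is sensitive to any difference between the two distributions, including differences in spread or shape.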